LLMs for Public Narrative

This project has been a collaboration with Hannah Chiou, Danny Kessler, Maggie Hughes, Emily S. Lin, and Marshall Ganz. It was accepted to the 7th Workshop on Narrative Understanding at NAACL 2025 (Poole-Dayan* et al., 2025).

Abstract

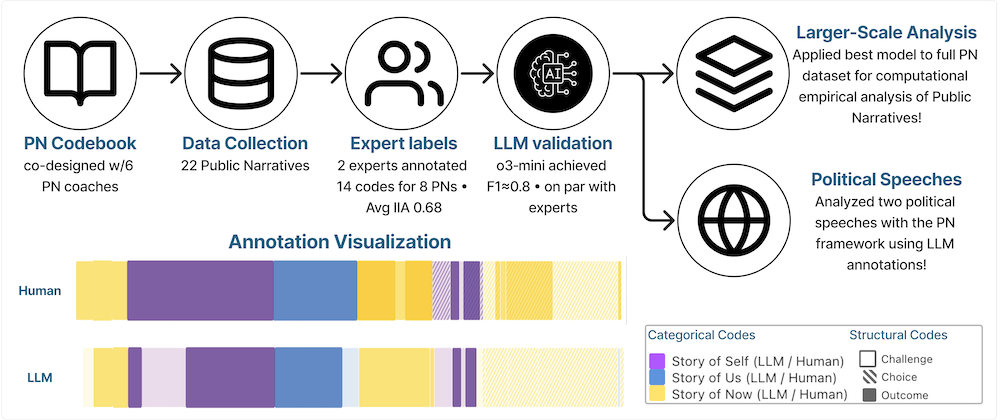

Public Narratives (PNs) are key tools for leadership development and civic mobilization, yet their systematic analysis remains challenging due to their subjective interpretation and the high cost of expert annotation. In this work, we propose a novel computational framework that leverages large language models (LLMs) to automate the qualitative annotation of public narratives. Using a codebook we co-developed with subject-matter experts, we evaluate LLM performance against that of expert annotators. Our results show that LLMs can achieve near-human-expert performance, with an average F1 score of 0.80 across 8 narratives and 14 codes. We then extend our analysis to empirically explore how PN framework elements manifest across a larger dataset of 22 stories. Lastly, we apply our analysis to a set of political speeches, establishing a novel lens through which to analyze political rhetoric in civic spaces. This study demonstrates the potential of LLM-assisted annotation for scalable narrative analysis and highlights key limitations and directions for future research in computational civic storytelling.
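As an illustration of what "an average F1 score across narratives and codes" can mean, here is a minimal sketch that scores LLM labels against expert labels for each (narrative, code) pair and then takes the mean. It assumes binary presence labels over some fixed text unit (for example, sentences). The data layout, the labeling unit, the convention of scoring a pair as 1.0 when neither side marks anything, and all names (`f1_score`, `average_f1`, `story_1`, `code_a`) are hypothetical. This is not the authors' evaluation code.

```python
from statistics import mean


def f1_score(gold, pred):
    """Binary F1 for one (narrative, code) pair.

    gold and pred are parallel lists of 0/1 labels, one per text unit
    (hypothetical unit, e.g. a sentence). Uses F1 = 2TP / (2TP + FP + FN).
    """
    tp = sum(1 for g, p in zip(gold, pred) if g and p)
    fp = sum(1 for g, p in zip(gold, pred) if not g and p)
    fn = sum(1 for g, p in zip(gold, pred) if g and not p)
    denom = 2 * tp + fp + fn
    # Assumed convention: if neither annotator marks the code anywhere,
    # count it as perfect agreement rather than dividing by zero.
    return 1.0 if denom == 0 else 2 * tp / denom


def average_f1(expert, llm):
    """Mean F1 over all (narrative_id, code) keys in the expert labels."""
    return mean(f1_score(expert[key], llm[key]) for key in expert)


# Hypothetical toy data: one story, two codes, four text units each.
expert = {
    ("story_1", "code_a"): [1, 0, 1, 0],
    ("story_1", "code_b"): [0, 1, 0, 0],
}
llm = {
    ("story_1", "code_a"): [1, 0, 0, 0],  # misses one expert-marked unit
    ("story_1", "code_b"): [0, 1, 0, 0],  # exact match
}

print(round(average_f1(expert, llm), 3))  # 0.833
```

Here `code_a` scores 2/3 (one hit, one miss) and `code_b` scores 1.0, so the unweighted mean is about 0.833. Averaging per pair weights every code equally regardless of how often it occurs; pooling all units before computing a single F1 would be a different, equally valid choice.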

Demo

Check out our interactive data visualization below, or click here to view it in a separate tab. Click “Select a story” to begin: